We surround ourselves with more and more data, and cloud platforms like Azure offer a wide range of services that support high data throughput and diverse data sources.

Over the years I’ve worked on several IoT projects where the goal was to ingest data from different systems, edge devices and industrial machines. Designing a solution for this kind of scenario requires careful consideration of the available technologies.

Beyond just ingesting data, you also have to consider how the data should be processed, stored and visualized, not only for your current needs but also so that the data can be used for other tasks in the future.

Ingestion

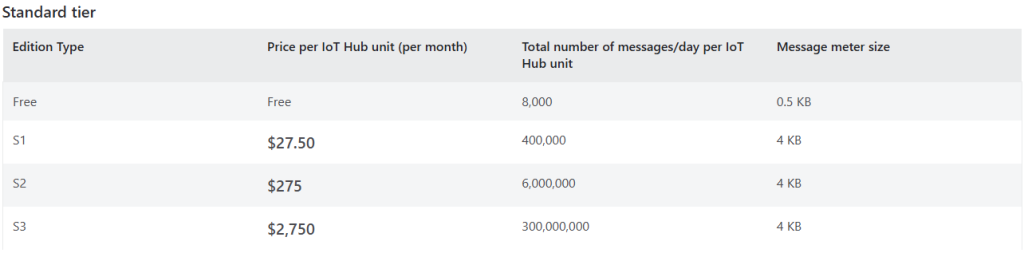

There are several approaches to ingesting or capturing data. In this post I will focus on two services, Azure IoT Hub and Azure Event Hub. The two services are similar but have some key differences, and they can also work well together.

Azure IoT Hub

Azure IoT Hub is a managed service for messaging between the cloud and your devices. It’s great for managing IoT devices, and supports bi-directional communication. Under the hood, IoT Hub is built on the same underlying event streaming concept as Event Hub, but with a specialized layer on top for device management. IoT Hub provides an identity registry so that each device has its own unique identity. This gives you control over your devices, which in turn improves data integrity and trust.

When it comes to cloud-to-device messages, IoT Hub enables you to send commands that trigger actions on your devices, such as performing a reboot or changing device properties. Device properties are changed via the device twin, which holds desired properties and reported properties. For example, if you want to adjust how often data is sent from the device, you can set a custom desired property, e.g. messageInterval, to your new value. The device code reads the change, tries to apply it, and reports the result back through its reported properties.
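To make the desired/reported flow a bit more concrete, here is a minimal sketch in plain Python. It does not use the Azure IoT SDK: the twin is modelled as two dictionaries, and the function name `apply_desired_properties`, the `SUPPORTED_PROPERTIES` set and the validation rule (a positive number of seconds) are assumptions made for illustration only.

```python
from typing import Any

# Hypothetical set of properties this device code knows how to apply.
SUPPORTED_PROPERTIES = {"messageInterval"}


def apply_desired_properties(
    desired: dict[str, Any], reported: dict[str, Any]
) -> dict[str, Any]:
    """Return the new reported properties after trying to apply `desired`.

    Simulates the device side of a twin update: supported, valid values are
    applied and echoed back; everything else leaves the reported state as is.
    """
    updated = dict(reported)  # copy so the caller's state is not mutated
    for name, value in desired.items():
        if name not in SUPPORTED_PROPERTIES:
            continue  # unknown property: ignore it
        # Assumed rule: the interval must be a positive number of seconds.
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        if is_number and value > 0:
            updated[name] = value
    return updated


reported = {"messageInterval": 60}
desired = {"messageInterval": 10, "unknownSetting": True}
new_reported = apply_desired_properties(desired, reported)
print(new_reported)
```

In this sketch an invalid or unknown desired value simply leaves the reported state unchanged, so the cloud side can tell that the change was not applied by comparing the desired and reported values.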

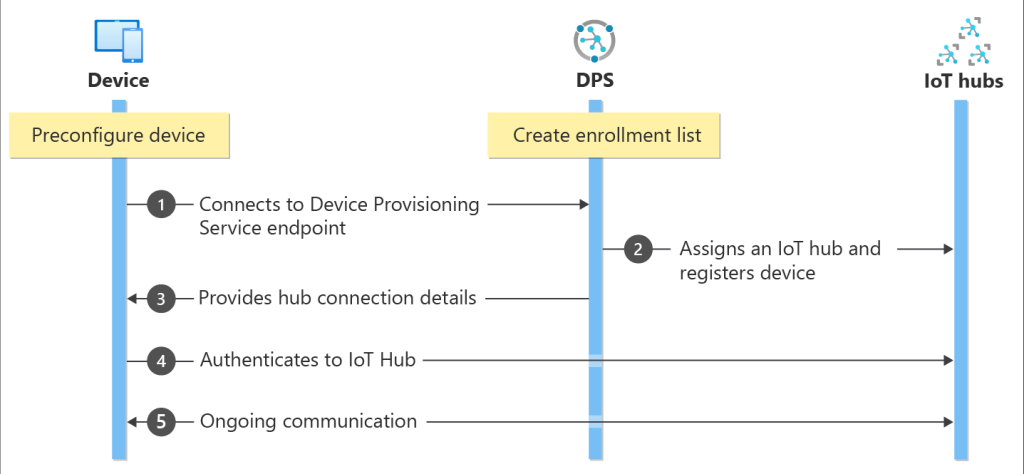

If you have a large number of devices, IoT Hub also provides its own provisioning service which allows you to automatically provision new devices. When a new device connects to the Device Provisioning Service (DPS), the service verifies the device against an enrollment list before onboarding it. If you want to look into DPS, head over to "Quickstart – Azure IoT Hub Device Provisioning Service".

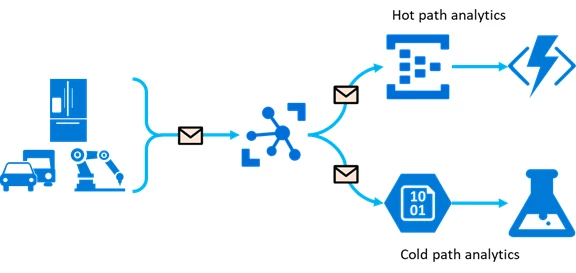

Another useful feature of IoT Hub that I want to mention is message routing. By default, all data flows through a built-in endpoint, but you can create custom routes to send data to different destinations, such as Azure Storage, Service Bus, Cosmos DB or Event Hub.

This flexibility allows you to tailor your data flow to your specific needs. Let’s say you have a fleet management system, where GPS and engine diagnostics from vehicles are streamed into an IoT Hub. You could configure a route that sends location data to Cosmos DB, making it available for applications that display vehicle tracking, while other data, such as performance metrics, goes on to Azure Stream Analytics (for example via an Event Hub endpoint) for anomaly detection.
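The routing idea in the fleet example can be sketched in plain Python. This is not the IoT Hub routing query language or SDK: the `Route` class, the `type` message field and the endpoint labels are hypothetical, and a lambda stands in for a routing query. The sketch assumes that every matching route receives the message and that unmatched messages fall back to the built-in endpoint.

```python
from dataclasses import dataclass
from typing import Any, Callable

Message = dict[str, Any]


@dataclass(frozen=True)
class Route:
    name: str
    condition: Callable[[Message], bool]  # stands in for a routing query
    endpoint: str  # hypothetical endpoint label


# Hypothetical routes for the fleet example; "type" is an assumed field.
ROUTES = (
    Route("location", lambda m: m.get("type") == "gps", "cosmos-db"),
    Route("diagnostics", lambda m: m.get("type") == "engine", "event-hub"),
)

FALLBACK_ENDPOINT = "built-in"


def route_message(message: Message, routes: tuple[Route, ...] = ROUTES) -> list[str]:
    """Return the endpoints a message is delivered to.

    Every matching route receives the message; if none match, the message
    goes to the fallback (built-in) endpoint.
    """
    matched = [route.endpoint for route in routes if route.condition(message)]
    return matched or [FALLBACK_ENDPOINT]


print(route_message({"type": "gps", "lat": 59.91, "lon": 10.75}))
print(route_message({"type": "engine", "rpm": 2400}))
print(route_message({"type": "heartbeat"}))
```

Keeping the routes as data rather than as nested if-statements mirrors how routes are configured on the hub instead of being coded into the devices.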

Azure Event Hub

Unlike IoT Hub, Event Hub does not care about devices and identities, which makes it an ideal service if device management is not one of your primary requirements.

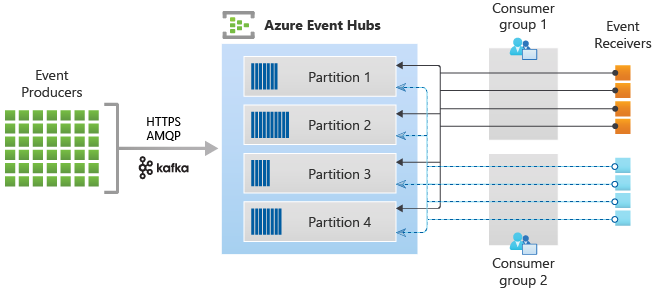

Event Hub is optimized for high-throughput messaging, and supports millions of events per second. By partitioning the incoming data and distributing it across different consumers, it lets multiple pipelines run in parallel, making it a great solution for real-time analytics and event-driven architectures.
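As a rough illustration of partitioning, the sketch below maps a partition key to a partition with a stable hash, so that events with the same key always land in the same partition and keep their relative order. The partition count, the SHA-256 based hash and the function names are assumptions of this sketch; the real service uses its own internal hashing.

```python
import hashlib
from collections import defaultdict

PARTITION_COUNT = 4  # assumed value for this sketch


def partition_for(partition_key: str, partition_count: int = PARTITION_COUNT) -> int:
    """Map a partition key to a partition index with a stable hash.

    Illustration only: the real service uses its own internal hash function.
    """
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def distribute(events, partition_count: int = PARTITION_COUNT) -> dict[int, list]:
    """Group (partition_key, payload) events by partition, keeping arrival order."""
    partitions = defaultdict(list)
    for key, payload in events:
        partitions[partition_for(key, partition_count)].append(payload)
    return dict(partitions)


events = [("truck-1", "a"), ("truck-2", "b"), ("truck-1", "c"), ("truck-3", "d")]
partitions = distribute(events)
for index, payloads in sorted(partitions.items()):
    print(index, payloads)
```

Each partition can then be read by its own consumer, which is what allows several pipelines to work through the stream in parallel.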

In many solutions where IoT Hub serves as a secure entry point that communicates with the devices, Event Hub can also come in as a useful event streaming mechanism after the data has been received from the edge. Message routing from IoT Hub allows you to forward data to one or more Event Hubs, enabling different services to consume and process data independently.

Azure Event Hub is an excellent solution for high-speed data ingestion, whether as part of a larger architecture together with IoT Hub or as a standalone service.

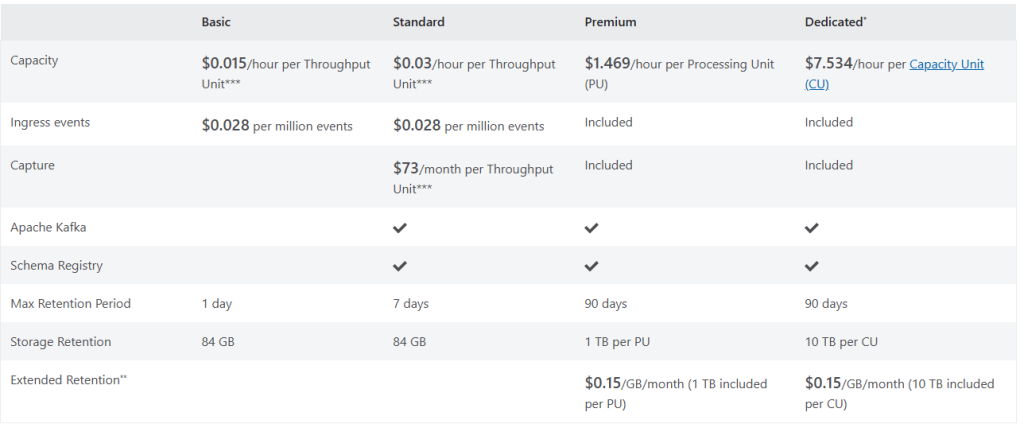

If you don’t have to deal with devices, Event Hub is often more cost-effective than IoT Hub, especially when handling large volumes of data, as it does not carry the additional overhead of device management and identity features.

There’s always another choice

In addition to these two services from Microsoft, there are other options when device management isn’t necessary. Azure Service Bus provides some great functionality for robust message handling, Azure Data Factory gives direct access to external databases and APIs, and even Azure Functions with the appropriate trigger might be well suited for some particular use cases.

Processing and storage

How the incoming data is processed depends heavily on the use case, as the different services in Azure provide different functionality and there are lots of ways to process your data. I will go into a couple of key services that I have experience with.

Azure Stream Analytics

For real-time processing of streaming data, Azure Stream Analytics is a great choice. It provides a SQL-like query language with lots of useful built-in functions, including ones for detecting anomalies. I especially found the different windowing functions to be super nice when detecting variances.
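To show what a windowing function does conceptually, here is a small plain-Python sketch of a tumbling window, meaning fixed-size, non-overlapping time buckets. It is not Stream Analytics query syntax. The 60-second window, the `(timestamp, value)` input shape and the start-inclusive boundary convention are all assumptions of this sketch.

```python
from collections import defaultdict
from statistics import mean

WINDOW_SECONDS = 60  # assumed window size


def tumbling_window_stats(readings, window_seconds: int = WINDOW_SECONDS) -> dict:
    """Aggregate (timestamp_seconds, value) readings into fixed windows.

    Returns {window_start: {"count", "avg", "spread"}}, where each reading
    belongs to exactly one non-overlapping window.
    """
    windows = defaultdict(list)
    for timestamp, value in readings:
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start].append(value)
    return {
        start: {
            "count": len(values),
            "avg": mean(values),
            "spread": max(values) - min(values),
        }
        for start, values in sorted(windows.items())
    }


readings = [(0, 20.0), (30, 22.0), (59, 21.0), (60, 20.0), (90, 35.0)]
stats = tumbling_window_stats(readings)
for start, summary in stats.items():
    print(start, summary)
```

With these sample readings the second window has a spread of 15.0 against 2.0 in the first, which is the kind of per-window variance that is easy to flag once the stream has been cut into windows.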

In addition to the different query functions, there are built-in integrations so that the query output can easily be stored in its final destination. The last time I used this service there were some restrictions on which services could be used as outputs, but the list is growing, and it is really nice to see that Azure Database for PostgreSQL is supported now (it wasn’t when I needed it).

Azure Functions

Functions are a good way to process your data if you need custom transformations and handling. With the native IoT Hub and Event Hub triggers you can process events as they arrive, using your favorite programming language.

Personally, I’ve used Functions in this context when working with PostgreSQL, where native integrations were lacking. It provided the flexibility I needed for transforming and storing data, while also being cost-efficient since you only pay for execution time.
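The kind of transformation such a function performs can be sketched without any Azure or database dependencies. The example below turns one JSON event body into a row for a parameterized insert. The payload fields (`deviceId`, `timestampMs`, `temperature`), the `telemetry` table and the `%s` placeholder style are hypothetical choices for illustration. The trigger binding and the database call itself are left out.

```python
import json
from datetime import datetime, timezone

# Hypothetical table and columns, shown only to give the row a target shape.
INSERT_SQL = (
    "INSERT INTO telemetry (device_id, recorded_at, temperature) "
    "VALUES (%s, %s, %s)"
)


def event_to_row(body: bytes) -> tuple[str, datetime, float]:
    """Turn one JSON event body into a row matching INSERT_SQL.

    Raises ValueError when a required field is missing, so the caller can
    decide whether to skip or set aside the event.
    """
    payload = json.loads(body)
    try:
        device_id = str(payload["deviceId"])
        epoch_ms = int(payload["timestampMs"])
        temperature = float(payload["temperature"])
    except KeyError as missing:
        raise ValueError(f"missing field: {missing}") from missing
    # Store timestamps as timezone-aware UTC values.
    recorded_at = datetime.fromtimestamp(epoch_ms / 1000, tz=timezone.utc)
    return device_id, recorded_at, temperature


row = event_to_row(
    b'{"deviceId": "pump-7", "timestampMs": 1700000000000, "temperature": "21.5"}'
)
print(INSERT_SQL, row)
```

Keeping the transformation as a pure function like this makes it easy to unit test separately from the trigger and the database connection.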

Azure Data Factory

For batch processing and complex data pipelines, Azure Data Factory (ADF) is another strong tool. It comes with a large set of built-in connectors, allowing you to move data from IoT Hub, Blob Storage, or external data sources into structured storage or analytics platforms. With all of the work that is being done on Microsoft Fabric these days, I guess there is still a lot to come.

Storage

Choosing the right storage can sometimes be tricky. You have to know what the data is supposed to be used for to pick the best storage service. If you are a bit uncertain, it is important to go for one that can give you what you need later on. That means storing as much as you can in a format that can be batch processed later by some service.

Storing your data as files in a file storage like Blob Storage or a data lake can be a good option, as it’s cost-effective and enables you to process the files with lots of different approaches later on. If you are planning to do machine learning or other analysis work in Azure Data Explorer, having the raw data is important.
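One possible way to keep raw data easy to batch process later is to write it as newline-delimited JSON under date-partitioned paths. The sketch below only builds the path and the file content; it does not upload anything. The `raw/` prefix, the year/month/day/hour layout and the function names are assumptions of this sketch, not a prescribed convention.

```python
import json
from datetime import datetime, timezone


def raw_blob_path(device_id: str, received_at: datetime) -> str:
    """Build a date-partitioned path: raw/<year>/<month>/<day>/<hour>/<device>.jsonl."""
    utc = received_at.astimezone(timezone.utc)
    return f"raw/{utc:%Y/%m/%d/%H}/{device_id}.jsonl"


def to_json_lines(events: list[dict]) -> str:
    """Serialize events as newline-delimited JSON, one event per line."""
    return "".join(json.dumps(event, sort_keys=True) + "\n" for event in events)


path = raw_blob_path("pump-7", datetime(2024, 5, 1, 13, 30, tzinfo=timezone.utc))
content = to_json_lines([{"temperature": 21.5, "deviceId": "pump-7"}])
print(path)
print(content, end="")
```

A layout like this lets a later batch job pick up a single day or hour of data without scanning everything.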

If you have concrete use cases, e.g. an application that needs data in a structured format and must be able to query particular data, then Azure SQL is probably something you would look into, or alternatively other relational databases. I prefer the combination of a robust PostgreSQL database with time series capabilities from plugins like TimescaleDB.

Document DBs like Azure Cosmos DB are also a great choice if you are handling large amounts of data and want to use it in your application with low latency. Cosmos DB preserves speed even when the data size grows, which makes it a good fit for both applications and other needs. Its global distribution is also something to consider if you are working on a global solution.

Summary

When working with IoT solutions there are several considerations. You have to think through how you want to ingest, process and store the information in the best possible manner, to create a solution with a robust architecture and enough flexibility to grow with the data-driven world of the future.